We're Sorry

Survail hardware version 2 is sold out

Version 3 is currently in closed beta with select multi-location clients. If you'd like to help us test it, contact your rep.

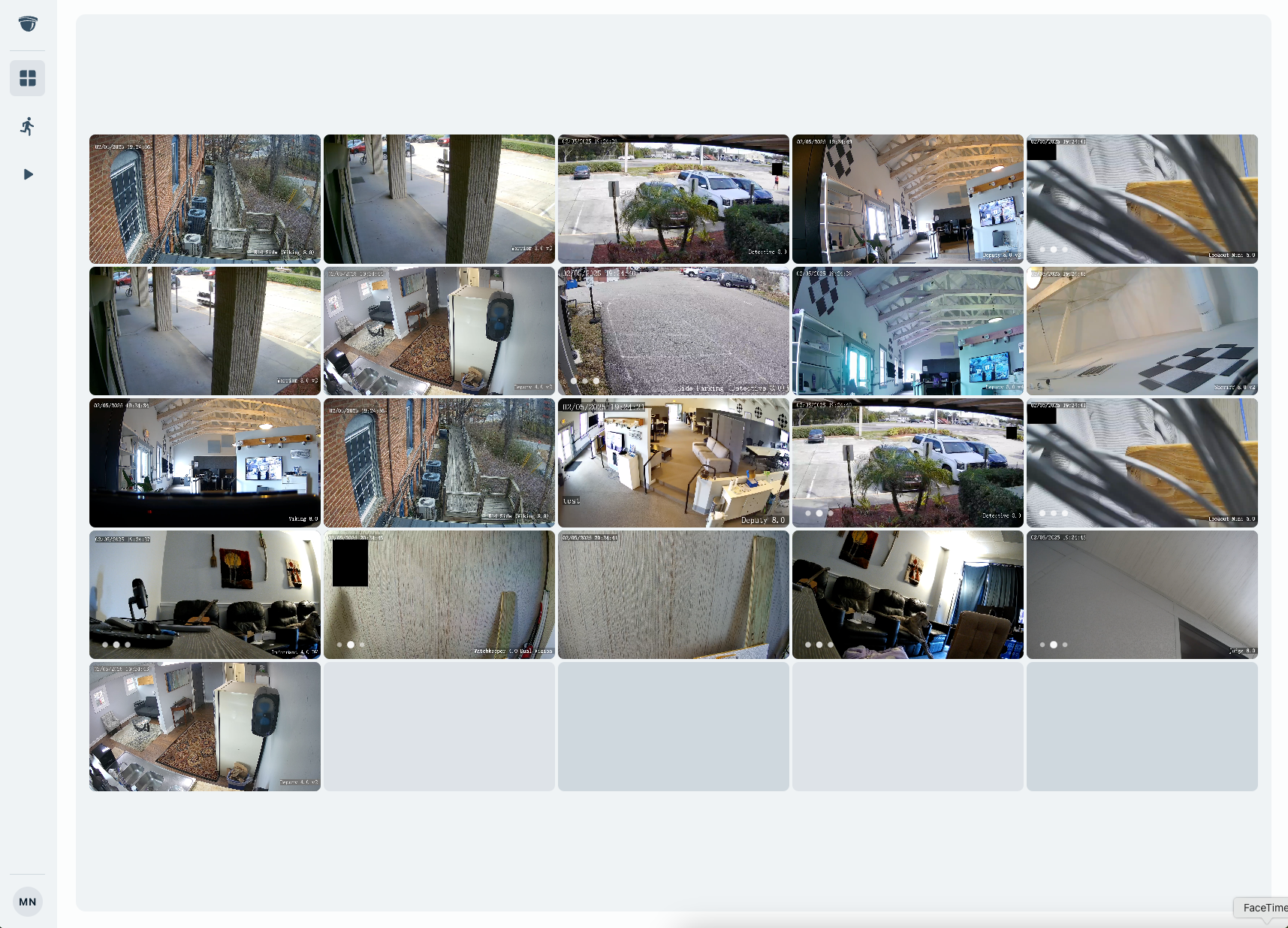

Version 3 Preview